Your forever SAST tool is here

AppSec tools are the interface for every false positive closed, every triage note written, and every "not exploitable" comment a developer leaves. These interactions carry exactly the signals you'd expect a smart system to learn from, or even be designed around. Yet most security tools treat this information as output, not input: useful only insofar as it can be exported to a PDF for a report or audit trail.

Today, we're excited to announce the general availability of Assistant Memories: a feature that lets Semgrep continuously learn and codify the security-relevant context in your organization, with no custom rules required.

With Memories, Semgrep turns manual triage into customization and developer feedback into reusable context. The result? An AppSec platform that tailors itself to your environment and makes zero-false-positive SAST a real possibility.

Context Is Everything

Every SAST tool generates false positives because exploitability depends on context that static analysis can't easily account for: organizational sanitizers, user exposure, and framework-specific protections.

The perfect security tool wouldn’t just flag potentially risky code; it would use the same context your developers do to determine if a finding is actually exploitable. Semgrep Assistant makes this a reality by combining our industry-leading SAST with prompting and retrieval systems that allow AI to reason about code like a security engineer.


Never triage the same issue twice

Generic AI applies one-size-fits-all reasoning. Memories, by contrast, let Assistant store and reference contextual information about your environment when making triage decisions.

Semgrep automatically suggests and scopes memories based on triage activity, developer feedback, and more. Users can also manually create memories and scope them by project, rule, or vuln-class.
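The post doesn't describe how Assistant applies scopes internally. As a minimal sketch, assuming each memory carries optional project, rule, and vuln-class fields where an unset field matches anything, scope matching could look like the following. `Memory`, `Finding`, `relevant_memories`, the rule IDs, and `sanitize_html()` are all hypothetical names for illustration, not Semgrep's API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Finding:
    project: str
    rule_id: str
    vuln_class: str


@dataclass(frozen=True)
class Memory:
    # Free-text context the model can cite when triaging a finding.
    text: str
    # Optional scope fields (hypothetical); None means "matches any value".
    project: Optional[str] = None
    rule_id: Optional[str] = None
    vuln_class: Optional[str] = None

    def applies_to(self, finding: Finding) -> bool:
        """True if every scope field that is set matches the finding."""
        pairs = (
            (self.project, finding.project),
            (self.rule_id, finding.rule_id),
            (self.vuln_class, finding.vuln_class),
        )
        return all(scope is None or scope == value for scope, value in pairs)


def relevant_memories(memories: list[Memory], finding: Finding) -> list[Memory]:
    """Keep only the memories whose scope covers this finding."""
    return [m for m in memories if m.applies_to(finding)]


# Hypothetical memories and finding, for illustration only.
memories = [
    Memory("Values wrapped in sanitize_html() are safe to render.", vuln_class="xss"),
    Memory("billing-api is internal-only and not user-exposed.", project="billing-api"),
    Memory("Findings from this rule in test fixtures are noise.", rule_id="hardcoded-secret"),
]
finding = Finding(project="web-frontend", rule_id="dangerous-inner-html", vuln_class="xss")
selected = relevant_memories(memories, finding)
print([m.text for m in selected])
```

Only the vuln-class-scoped memory applies here. Narrow scopes keep context about one project or rule from leaking into unrelated triage decisions.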

One Fortune 500 customer saw a further 2.8x improvement in false positive detection, on top of Assistant's baseline noise reduction, after adding just two memories.

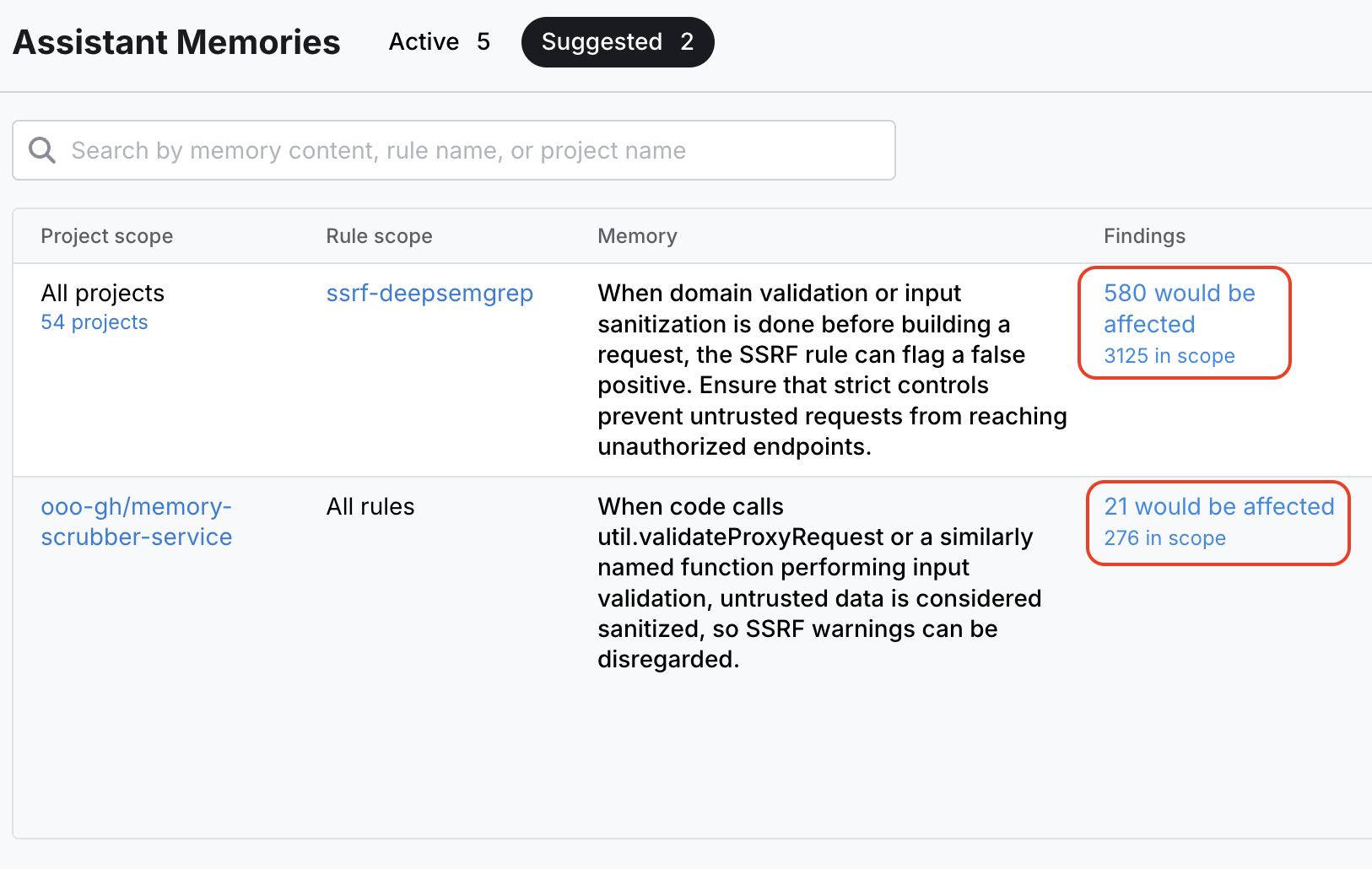

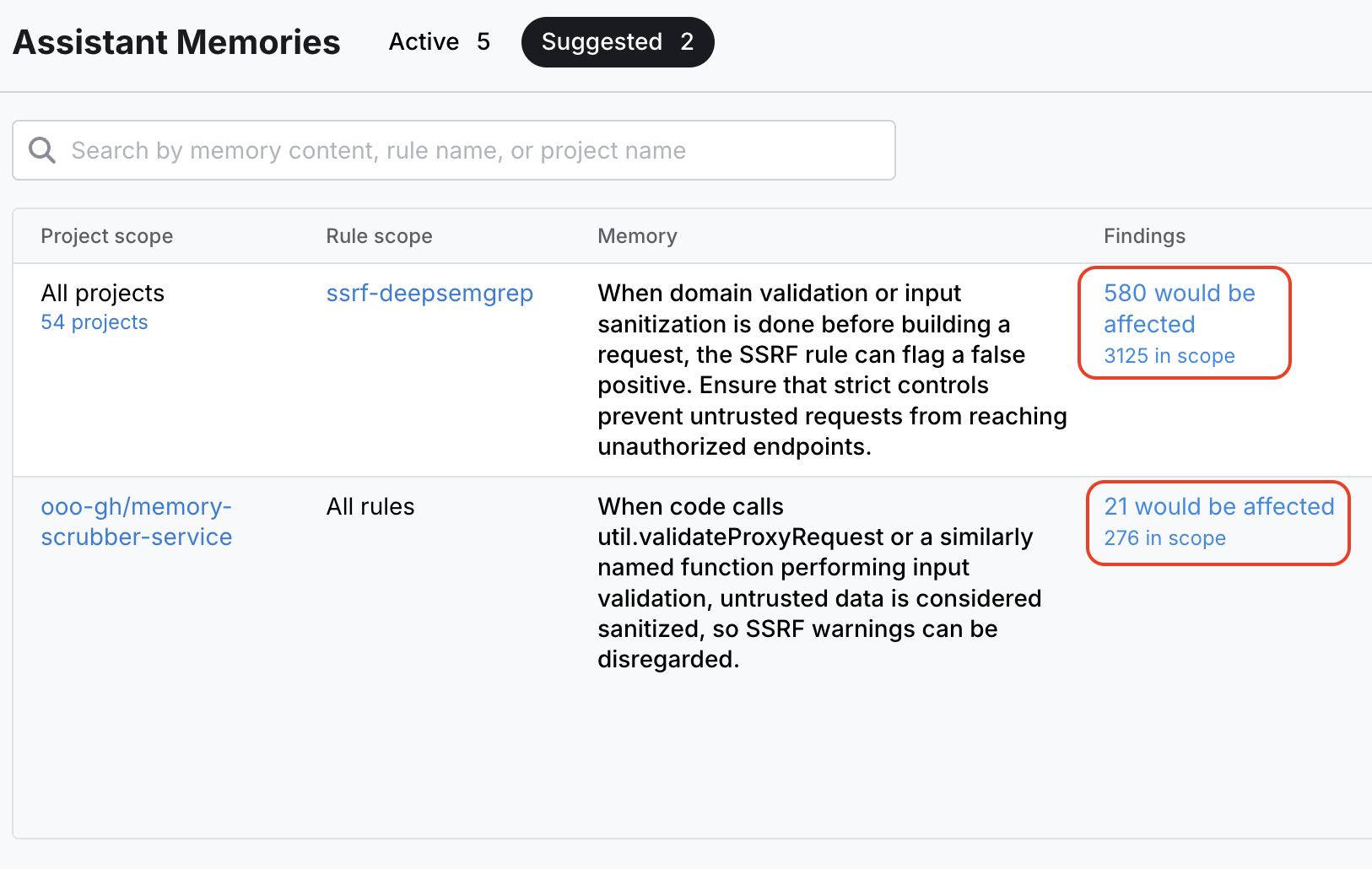

In the example below, Semgrep suggests a memory scoped to a single rule that returned over 3,000 findings. Turning the memory on immediately identifies 580 of them as clear false positives.

Assistant automatically suggests memories based on past triage decisions and developer feedback. Admins can preview the potential impact of each suggested memory and turn them on with the click of a button. Memories can be scoped by project, rule, and vuln-class.

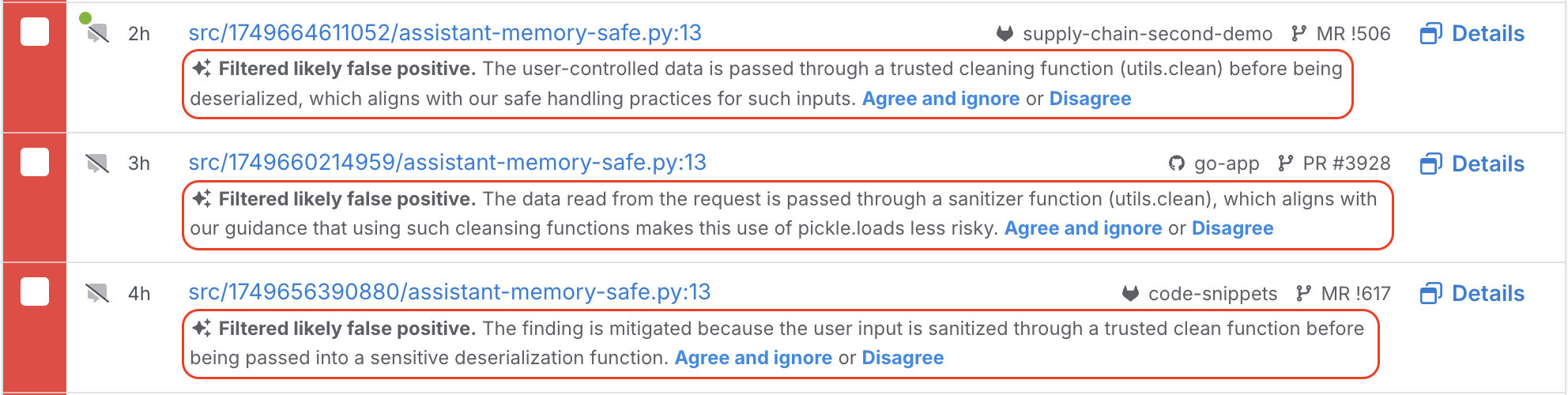

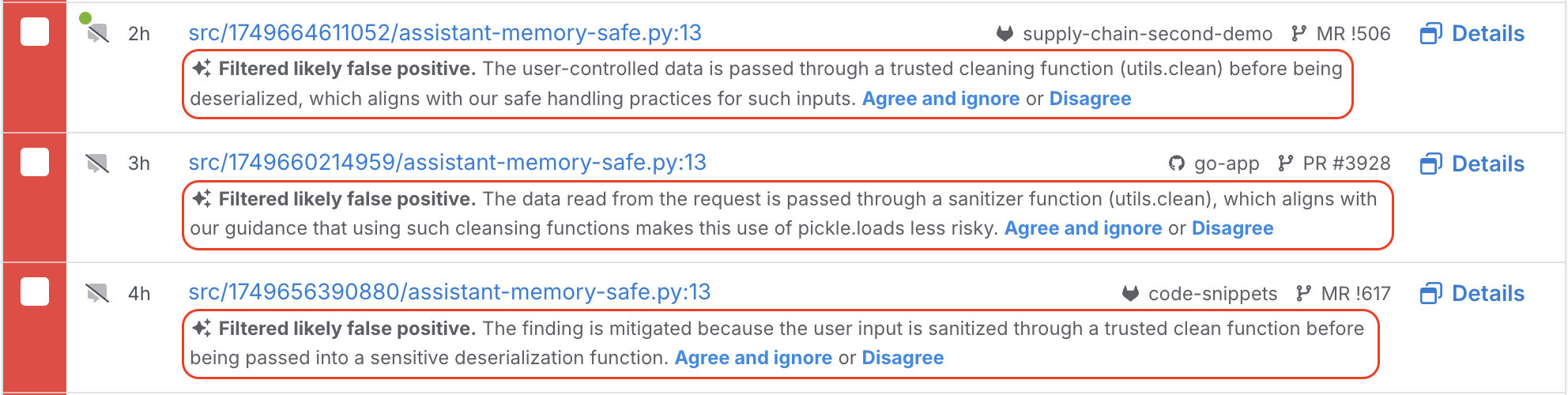

When Assistant filters out a false positive, it provides reasoning that references the memory if relevant. This makes the decision easy for users to spot check and validate.

What sets Memories apart from other security tools is measurable, compounding value that you can track over time. Users can see the exact impact of each memory and how many false positives it will eliminate in their production backlog. In addition, Assistant tracks all future instances where the memory is referenced and used to make a triage decision.

Memories turn manual triage into a high-ROI activity that permanently reduces the number of irrelevant alerts developers and security folks see.

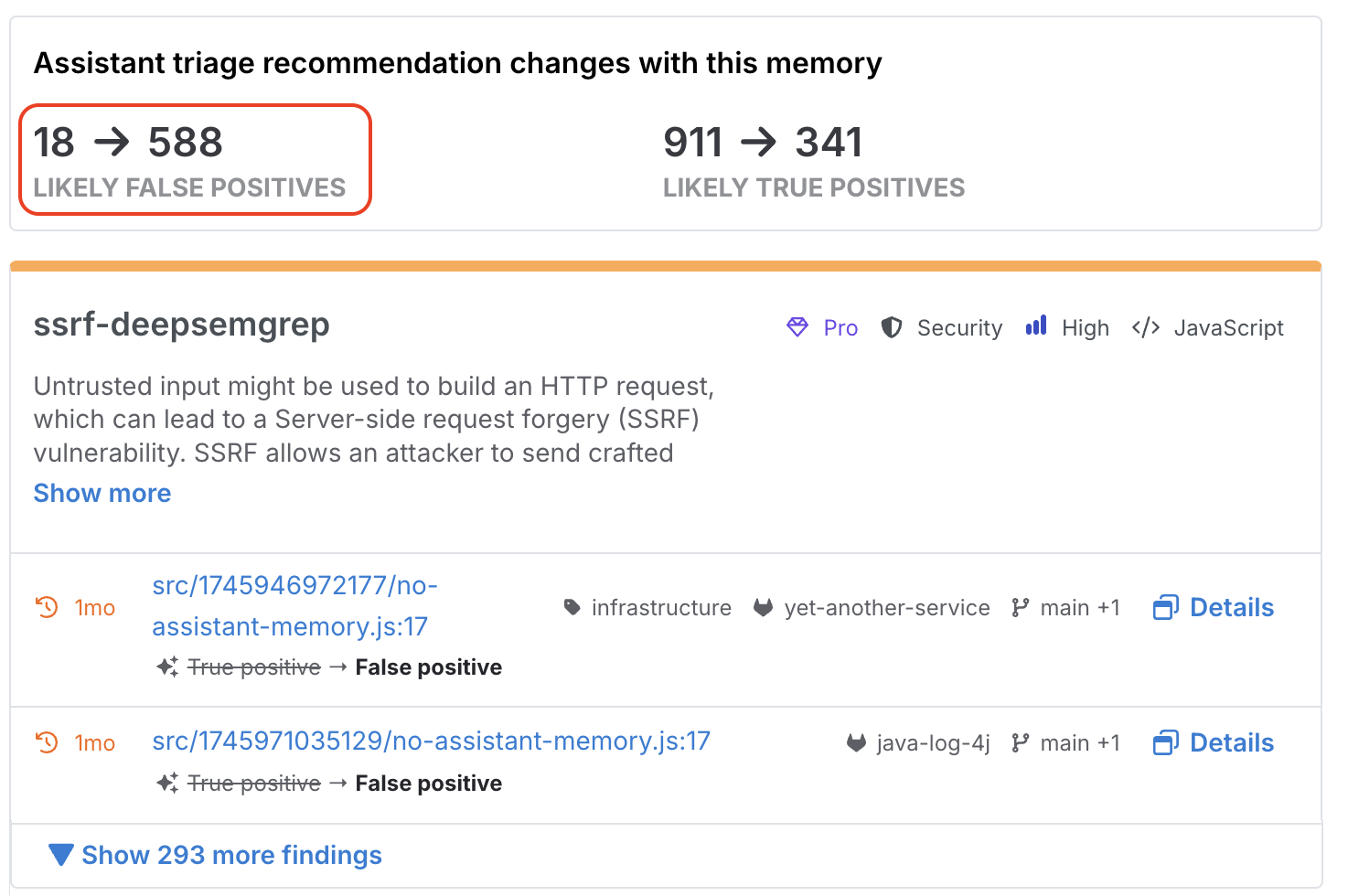

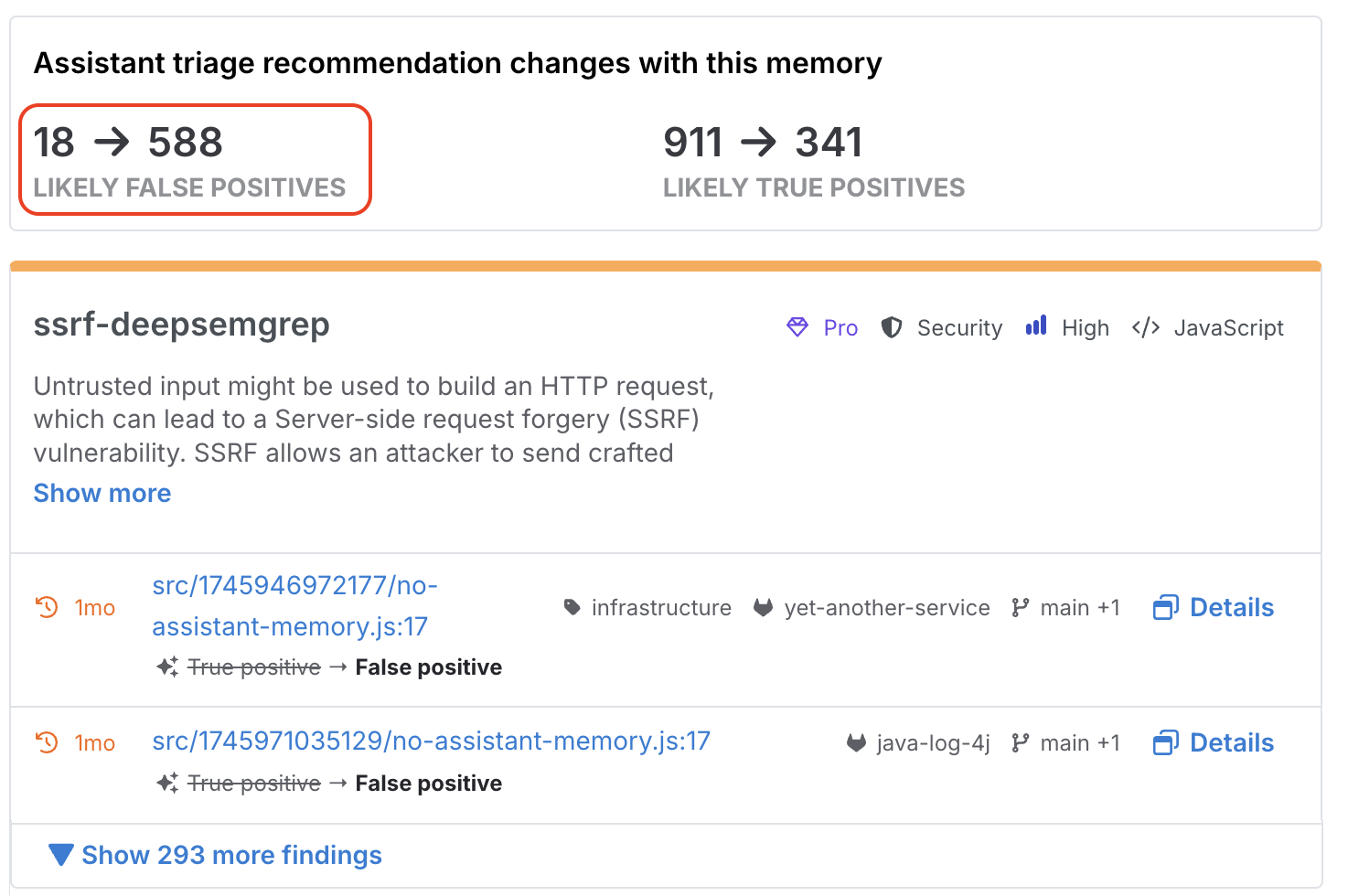

In this example, Semgrep suggests a memory scoped to a specific rule that's returning too many findings. Without the memory, Assistant identifies only 18 false positives; with the memory turned on, it filters out 588.
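The with/without comparison above suggests one way to preview a memory's impact: re-triage the in-scope backlog twice and count the false-positive verdicts. The sketch below assumes that approach, which the post does not confirm. `preview_impact` and `toy_triage` are hypothetical, and `toy_triage` is a keyword stub standing in for a model call.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Finding:
    rule_id: str
    snippet: str


# Hypothetical triage signature: (finding, memory text or None) -> True if the
# finding is judged a false positive. In practice this would be a model call.
Triage = Callable[[Finding, Optional[str]], bool]


def preview_impact(
    backlog: list[Finding], memory: str, rule_id: str, triage: Triage
) -> dict[str, int]:
    """Compare false-positive verdicts on in-scope findings with and without a memory."""
    in_scope = [f for f in backlog if f.rule_id == rule_id]
    fp_without = sum(triage(f, None) for f in in_scope)
    fp_with = sum(triage(f, memory) for f in in_scope)
    return {"in_scope": len(in_scope), "fp_without": fp_without, "fp_with": fp_with}


def toy_triage(finding: Finding, memory: Optional[str]) -> bool:
    # Stand-in for the model: without extra context it only dismisses test code.
    if "test_" in finding.snippet:
        return True
    # With a memory supplied, it also dismisses values passed through the
    # hypothetical sanitize_html() sanitizer. This stub only checks that a
    # memory was given; a real system would put the memory text in the prompt.
    return memory is not None and "sanitize_html(" in finding.snippet


backlog = [
    Finding("xss-rule", "render(sanitize_html(user_input))"),
    Finding("xss-rule", "render(user_input)"),
    Finding("xss-rule", "def test_render(): render(x)"),
    Finding("sqli-rule", "db.execute(sanitize_html(q))"),  # outside the memory's scope
]
memory = "Values wrapped in sanitize_html() are safe to render."
result = preview_impact(backlog, memory, "xss-rule", toy_triage)
print(result)  # → {'in_scope': 3, 'fp_without': 1, 'fp_with': 2}
```

The difference between `fp_with` and `fp_without` is the number of findings the memory would newly filter. Here that is one finding, while in the example above it is 570.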

Memories as a foundation for AI

At Semgrep, we recognize that AI models are constantly getting better at understanding code. Almost everyone in AppSec agrees that AI feels inevitable, even if it's unclear exactly how it will manifest. That's why we're laser-focused on building the right infrastructure around AI to give it as much leverage as possible in security contexts.

We want Semgrep to be the foundation and scaffolding that lets any large language model do security related work 10x more effectively and 10x more reliably, regardless of the model's base capability.

Assistant Memories is an example of this approach: our platform gets smarter about your specific environment with each interaction, creating a compounding advantage that grows stronger as foundational models do.

Another great example is our MCP server (and our Replit integration). It’s really, really cool to watch a language model generate code, call for a Semgrep scan, and then use the results to iterate and improve its output.

This architectural approach means our customers aren't just buying today's AI capabilities; they're investing in a platform that will continuously absorb and apply tomorrow's AI breakthroughs. We've built infrastructure that automatically translates foundational model improvements into customer value: better coverage, lower noise, and vulnerability detection that's tailored to each organization.