Appsec Development: Keeping it all together at scale

Note: Clint Gibler helped in the brainstorming and editing of this post.

When I joined Snowflake ~2 years ago, we were a medium-sized startup, but well on our way to explosive growth over the next year. Until that point, threat models and security reviews were largely handled by a single person.

It did not take long for calendars to begin overflowing with security review meeting requests. Projects became larger and much more complex to reason about. Soon they began backing up in the security review queue, delayed by weeks at a time.

Unsustainable


The way we were doing things wasn’t going to work.

We knew we had to take decisive action.

Rather than just trying to keep up with the security review backlog, we needed to develop an approach that would be distributed and scalable.

In this article we’ll discuss:

Challenges security teams face with informal, centralized security review

Threat model and security review evidence that stands up to scrutiny

Surprising ways increasing scale can slow things down

Going fast by deciding when not to threat model, and what to do instead

Now the story of ~~a wealthy family who lost everything and the one son who had no choice but to keep them all together~~ a scrappy startup on its way to IPO, and the teams who had no choice but to scale their processes, together.

What didn’t work: Security engineers threat model every story

In the beginning, security review consisted of ad hoc 30-minute meetings with project teams for most features they created. Often, this happened after code was already being written.

Sometimes the results were captured in Jira, but most of the time they were communicated verbally. Many decisions were forgotten or misinterpreted, making for frequent awkward discussions about blocked releases.

With evidence collection and coverage failing, we needed to bring sanity to the process before we could consider scale. Our first wins were simple:

Meetings with engineering teams to understand how and where they get work done

Security review processes and deliverables that mostly fit into existing software development processes

Custom Jira ticket types and workflows for security review

Standardized Markdown templates for consultation, threat modeling, and penetration testing engagements

An approach familiar to developers: peer-reviewed deliverables committed to source control (GitHub), validated with a combination of test cases and penetration tests

Peer review by multiple software engineers, then security engineers

Pros & cons

Peer review created accountability and engagement. Templates helped security engineers provide consistent, robust evidence that could be referenced at any time, reducing those awkward release time surprises.

Our updated review process captured the right evidence and helped make the work more visible. Initially we did not even know how deep the backlog was. Now it was clear that we were not going to be able to keep up with demand. Projects quickly began seeing delays, weeks in many cases.

Realizations

We were happy, but developers were mostly unhappy

We were slowing the business down, and engineering teams were vocal in letting us know. They demanded the ability to unblock themselves by performing security reviews, and we wanted to give it to them. But there was still work to be done to get them ready.

Our strategy for scaling software security was (and is) not to hire an army of security engineers. Instead, we leverage our engineering culture and ownership values to make every software engineer successfully own the security of what they produce.

What didn’t work: Software engineers threat model every story

Having every software engineer successfully own security did not happen overnight.

We started with a well known approach: Security Champions. At Snowflake, we call them Security Partners.

These champions are security-aware volunteer software engineers who act as a point of contact and domain expert within their team. At Snowflake scale, volunteerism was not going to be enough. Security was not optional; every team needed representation.

We met with engineers and engineering leadership with a clear ask:

At least one engineer on every team to function as a Security Partner

We also provided a clear set of requirements that describe the ideal Security Partner:

Strong engineering skills

Visibility into key projects

Influential team member

These criteria self-selected for senior engineers on each team who already felt a strong sense of ownership, including for security. Now all we needed to do was show them how to do it.

Instead of threat modeling in isolation, security engineers began teaming up with Security Partners and software engineers on each threat model. Engineering teams became the owners of the process and deliverables while the product security team shifted to a facilitation role.

Pros & cons

We had the basics of scale now, and the results were night and day.

Engineering teams felt they were in control of their destiny and the backlog stabilized. We taught the basics of STRIDE-based threat modeling to Security Partners, and had the majority of teams producing models for every new feature. We facilitated sessions, and teams could continue iterating on their own.

This part felt really good. The backlog was so bad, the delays so bad, and the overall perception of security was so bad that being able to give software engineers the autonomy and scale they wanted felt great, and they really appreciated it.

It was still not enough (pro-tip: it will never be enough). The good feelings faded, and the cracks started to show. We had succeeded in moving from centralized delay to localized delay.

Teams were spending 2 to 6 hours on each threat model, in large part because STRIDE-per-element threat modeling is more art than science. We went from a single informal 30-minute meeting for some projects to multiple meetings in which we coached teams to produce formal, high-quality threat models for every single project they worked on.

This began forcing teams to question whether or not they “had time for security”. They were annoyed when they had to threat model projects that were obviously low risk. Quality was inconsistent.
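For readers new to STRIDE per element: each element of a data flow diagram is checked against the STRIDE categories (Spoofing, Tampering, Repudiation, Information disclosure, Denial of service, Elevation of privilege) that typically apply to that element type. The Python sketch below encodes the chart as it is commonly presented in Microsoft SDL material; the element kinds, helper names, and the example diagram are hypothetical. Even a tiny diagram yields a long list of element and threat pairs to discuss, which gives a feel for why sessions can run long.

```python
from enum import Enum


class Threat(Enum):
    SPOOFING = "S"
    TAMPERING = "T"
    REPUDIATION = "R"
    INFORMATION_DISCLOSURE = "I"
    DENIAL_OF_SERVICE = "D"
    ELEVATION_OF_PRIVILEGE = "E"


# STRIDE-per-element chart: threat categories usually considered for each
# data flow diagram (DFD) element type.
STRIDE_PER_ELEMENT = {
    "external_entity": {Threat.SPOOFING, Threat.REPUDIATION},
    "process": set(Threat),  # every category applies to a process
    "data_store": {Threat.TAMPERING, Threat.INFORMATION_DISCLOSURE,
                   Threat.DENIAL_OF_SERVICE},
    "data_flow": {Threat.TAMPERING, Threat.INFORMATION_DISCLOSURE,
                  Threat.DENIAL_OF_SERVICE},
}


def applicable_threats(kind, holds_audit_logs=False):
    """Return the STRIDE categories to review for one DFD element."""
    threats = set(STRIDE_PER_ELEMENT[kind])
    # Repudiation is usually added for data stores that hold audit logs.
    if kind == "data_store" and holds_audit_logs:
        threats.add(Threat.REPUDIATION)
    return threats


def review_checklist(elements):
    """List (element, threat) pairs, in S-T-R-I-D-E order per element.

    `elements` is a list of (name, kind, holds_audit_logs) tuples.
    """
    checklist = []
    for name, kind, holds_logs in elements:
        threats = applicable_threats(kind, holds_logs)
        # Iterating the Enum yields members in declaration order.
        checklist.extend((name, t) for t in Threat if t in threats)
    return checklist


# Hypothetical feature: a user calls an API that reads and writes a table.
dfd = [
    ("user", "external_entity", False),
    ("api service", "process", False),
    ("orders table", "data_store", False),
    ("user -> api", "data_flow", False),
    ("api -> table", "data_flow", False),
]
checklist = review_checklist(dfd)
print(len(checklist))  # 2 + 6 + 3 + 3 + 3 = 17 pairs
```

Each of those 17 pairs is a prompt for discussion rather than a finding, and deciding which ones matter is where the "art" comes in.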

Realizations

We had to reduce the number of projects that needed STRIDE-based threat modeling and we needed to reduce the time the process added to each feature release.

We needed a lighter weight way to assess security risk.

What didn’t work: Software engineers assess risk on every story

People do not always have a good sense for what they want to do in life, but often have a clear idea of things they do not want to do.

Hot security review cornballer


The same is true for software engineers and security. They may not always know what the most secure way is, but they have an excellent sense for when security is simply getting in the way.

We realized software engineers were upset about threat modeling low risk features, and we knew we needed a way to identify these up front to bypass threat modeling.

Slack is fairly well known for creating goSDL, a web-based questionnaire that helps developers determine how risky their project is by asking what it does and what technology it uses. This guided approach seemed like a good fit, and the format was easy to adapt to our Snowflake-specific use cases.

Our first set of questions looked like this:

Does this component make major changes or implement new authentication or security controls? (High risk)

Is this project highly important strategically? (High risk)

Is the target for this project internal-only or a prototype? (Low risk)

Is this a bug fix with no major impact on functionality? (Low risk)

Will this component access or store sensitive customer data? (High risk)

Will this component introduce new third-party dependencies, software, or services? (High risk)

Rather than threat model every story, we asked teams to first risk assess every story, and to threat model only when the outcome was anything other than low risk.
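The questionnaire and the "threat model unless low risk" rule could be sketched in Python as follows. The question text comes from the list above; the short keys and the way answers are combined (any high-risk "yes" wins, a project rates low only when a low-risk question is answered "yes", and everything else defaults to high) are assumptions for illustration, not the actual Snowflake logic. Refusing to score an incomplete questionnaire is one way to keep the result auditable.

```python
from dataclasses import dataclass
from enum import Enum


class Risk(Enum):
    LOW = "low"
    HIGH = "high"


@dataclass(frozen=True)
class Question:
    key: str          # hypothetical short identifier
    text: str
    risk_if_yes: Risk


QUESTIONS = [
    Question("new_security_controls", "Does this component make major changes or "
             "implement new authentication or security controls?", Risk.HIGH),
    Question("strategic", "Is this project highly important strategically?", Risk.HIGH),
    Question("internal_or_prototype", "Is the target for this project "
             "internal-only or a prototype?", Risk.LOW),
    Question("minor_bug_fix", "Is this a bug fix with no major impact on "
             "functionality?", Risk.LOW),
    Question("sensitive_customer_data", "Will this component access or store "
             "sensitive customer data?", Risk.HIGH),
    Question("new_third_party", "Will this component introduce new third-party "
             "dependencies, software, or services?", Risk.HIGH),
]


def assess(answers: dict) -> Risk:
    """Score yes/no answers (keyed by Question.key), biased toward HIGH."""
    missing = [q.key for q in QUESTIONS if q.key not in answers]
    if missing:
        # Every question must be answered so reviewers can audit the result.
        raise ValueError(f"unanswered questions: {missing}")
    triggered = {q.risk_if_yes for q in QUESTIONS if answers[q.key]}
    if Risk.HIGH in triggered:
        return Risk.HIGH
    if Risk.LOW in triggered:
        return Risk.LOW
    return Risk.HIGH  # nothing clearly low risk, so err toward high


def needs_threat_model(answers: dict) -> bool:
    """Threat model on any outcome other than low risk."""
    return assess(answers) is not Risk.LOW
```

For example, a story whose only "yes" is the minor bug fix question comes out low risk, but adding a new third-party dependency to the same story flips it back to high.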

Pros & cons

Our first minimum viable risk assessment template was intentionally calibrated to rate almost everything as high risk. We took this approach because wrongly rating a project high risk would only mean we spent more time than we needed, whereas wrongly rating a project low risk could mean we shipped a vulnerable project.

We also needed auditability, so that any security or compliance stakeholder could review and validate the risk assessment regardless of how technical they were. This meant questions could not be subjective, which made them more challenging to come up with.

We were confident that when risk was assessed as low, the feature could safely skip the threat modeling process, and anecdotally the volume of threat models dropped by about 30%.

Our bet was that teams would give us the same feedback about risk assessment questions that they gave us about threat models, and that the questions would magically become customized and more relevant for each team.

We quickly learned that handing teams a minimum viable product and telling them: “It’s an MVP, it's intentionally painful. Go ahead and use it and customize it until it's not painful” rarely results in use, customization, or pain reduction.

This is classic "throwing it over the fence". It results in the perception that your process is hot garbage, slowing everything down.

When a software engineer feels they have to choose between doing ~~hot garbage~~ security and doing engineering, you have lost the battle.

Improving an MVP while trying to ship under pressure is difficult and creates tension.

A five minute risk assessment sounds great, until someone has to do 100 of them.

Realizations

We were still not asking the right questions to deselect all low risk things.

Taking this risk assessment MVP and making it something people like and use required much more engagement than we initially predicted. That forced us to double down on creating a robust security partner community.

We realized that we had to be in constant communication with software engineers and security partners so that we could catch them between and after projects, when the feedback was fresh and they had time to help make improvements.

With a focus on getting quality feedback, we started to realize there was something below low risk. There were work items like adding test cases and certain bug fixes, which had no security impact at all.

What started working

Many things had to come together to get to this point, but the key was ever increasing engagement between security engineers and the security partner community. It may seem obvious, but security teams have to listen to software engineers, rationalize their concerns, and give them what they need to go faster.

Do not force them to slow down with security.

Do not give them what you want.

This led us to introduce the concept of security impact, the most basic entry point into the review process. In our first version, if a software engineer was doing any of the following, we considered the work to have no security impact:

Adding one or more new tests

Updating a .ref file

Adding comments to existing code

Adding or updating CODEOWNERS or .gitignore files
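A path-based version of this first-pass check might look like the Python sketch below. It assumes changes arrive as (status, path) pairs using the status letters from `git diff --name-status` (A for added, M for modified, D for deleted), and it assumes a hypothetical test-file naming convention. Detecting comment-only edits needs a language-aware diff, so that criterion is left out; anything not explicitly exempt falls through to risk assessment.

```python
from pathlib import PurePosixPath

# Files whose addition or update was treated as having no security impact.
EXEMPT_FILENAMES = {"CODEOWNERS", ".gitignore"}


def is_test_file(path: str) -> bool:
    """Hypothetical convention: tests live under test/ or tests/, or are
    named test_* or *_test.*"""
    p = PurePosixPath(path)
    return (
        any(part in ("test", "tests") for part in p.parts[:-1])
        or p.name.startswith("test_")
        or p.stem.endswith("_test")
    )


def change_is_exempt(status: str, path: str) -> bool:
    p = PurePosixPath(path)
    if status == "A" and is_test_file(path):
        return True  # adding one or more new tests
    if status == "M" and p.suffix == ".ref":
        return True  # updating a .ref file
    if status in ("A", "M") and p.name in EXEMPT_FILENAMES:
        return True  # adding or updating CODEOWNERS / .gitignore
    return False     # deletions, renames, and everything else need review


def has_security_impact(changes) -> bool:
    """changes: iterable of (status, path) pairs for one work item."""
    return not all(change_is_exempt(status, path) for status, path in changes)
```

The exemptions are an allowlist on purpose: a new kind of change only skips review after someone decides it belongs on the list.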

This meant the security review process now contained 3 main steps:

Security impact assessment

Risk assessment

Threat model

In the best case, you could exit after step 1. In the worst case, you were working on something truly risky and knew that threat modeling was necessary.

In addition to security impact, we pushed risk assessment further left to project planning.

Determining your project is high risk after committing timelines almost always negatively impacts delivery. We had to know risk was high before dates were committed so that the entire scope of security review could be factored in, including penetration testing.

Let's review: we started with just threat modeling, added risk assessments, and have been working on security impact assessments to streamline our process even more. But the missing piece was getting ahead of security review during overall project planning.

This brings us to our overall model:

Project Risk Assessment: Helps teams manage risk to their timelines, and helps us schedule penetration tests

Security Impact Assessment: Lets teams quickly exit the review process without the need for peer review

Risk Assessment: Identifies potentially risky items, with peer review

Threat Model: Analyzes risky designs and creates mitigations

We asked software engineers to complete these deliverables themselves, and to use their security partner as a mandatory peer reviewer. Security engineers only got involved on high risk items, or when teams needed help with a specific threat model or consultation.
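The per-story part of this model amounts to a small routing rule. The Python sketch below is one way to express it, under the simplifying assumption that risk is binary; the project risk assessment happens earlier, at planning time, and is not modeled, nor are ad hoc consultations. The deliverable and reviewer names are illustrative strings.

```python
from dataclasses import dataclass, field


@dataclass
class ReviewPlan:
    deliverables: list = field(default_factory=list)
    reviewers: set = field(default_factory=set)


def plan_review(has_security_impact: bool, high_risk: bool) -> ReviewPlan:
    """Decide which deliverables a story needs and who must review them."""
    plan = ReviewPlan(deliverables=["security impact assessment"])
    if not has_security_impact:
        # Early exit: impact-free work needs no peer review.
        return plan
    # Security partners are the mandatory peer reviewers from here on.
    plan.deliverables.append("risk assessment")
    plan.reviewers.add("security partner")
    if high_risk:
        # Only high-risk items pull in the product security team.
        plan.deliverables.append("threat model")
        plan.reviewers.add("security engineer")
    return plan
```

Keeping the security engineer out of the low-risk path is what lets the central team's time track the number of risky items rather than the total number of stories.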

Where we’re headed

With software engineers in the driver's seat, we began asking ourselves how to make them more successful.

Approaches that work for us:

Quality SAST tools (like Semgrep) for software engineers that reduce the cognitive overhead of code reviews and increase the odds a bug will be detected, for example, by blocking known security anti-patterns, enforcing internal coding standards and best practices, etc.

Converting mitigations created by threat models into re-usable secure defaults. Never threat model the same thing twice.
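As a hypothetical illustration of turning a mitigation into a secure default: suppose several threat models concluded that untrusted input must never reach a shell, that commands must time out, and that failures must not be silently ignored. Rather than re-deriving that in every review, a team could ship a shared wrapper like the Python sketch below (the `run_command` name is invented for this example). A SAST rule, for example in Semgrep, could then flag direct `subprocess` calls that pass `shell=True`, steering engineers toward the wrapper.

```python
import subprocess
from typing import Sequence


def run_command(argv: Sequence[str], timeout: float = 30.0) -> subprocess.CompletedProcess:
    """Run a program with mitigations baked in (hypothetical shared helper).

    - Only an argument list is accepted and shell=False is fixed, so shell
      metacharacters in untrusted input are never interpreted.
    - A timeout is always applied (raises subprocess.TimeoutExpired).
    - A non-zero exit raises subprocess.CalledProcessError instead of
      being ignored.
    """
    if isinstance(argv, (str, bytes)) or len(argv) == 0:
        raise ValueError("pass a non-empty argument list, not a shell string")
    return subprocess.run(
        list(argv),
        shell=False,
        timeout=timeout,
        check=True,
        capture_output=True,
        text=True,
    )
```

Because the wrapper only accepts an argument list, a call such as `run_command(["git", "status"])` hands its arguments straight to the program with no shell interpretation, and future threat models can simply cite the helper.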

Today we are still hard at work lowering the barrier to security review.

For example, software engineers still need to fix hundreds of bugs, and we still ask engineers to risk assess them all. This does not scale well, and we know there are many of these fixes that will not have any security impact.

The difference between when we started and today is that software engineers understand the burden of auditability we need to meet, and security engineers understand engagement is something that has to happen constantly and proactively.

A number of teams have worked with us to create security impact criteria that let them flow hundreds or even thousands of issues through without unnecessary effort. Those same teams have created fully customized risk assessments, and see threat modeling as a valuable exercise.

It is not perfect, but we created a community of security engineers, software engineers and security partners who understand the journey we are on, and we are on it together.

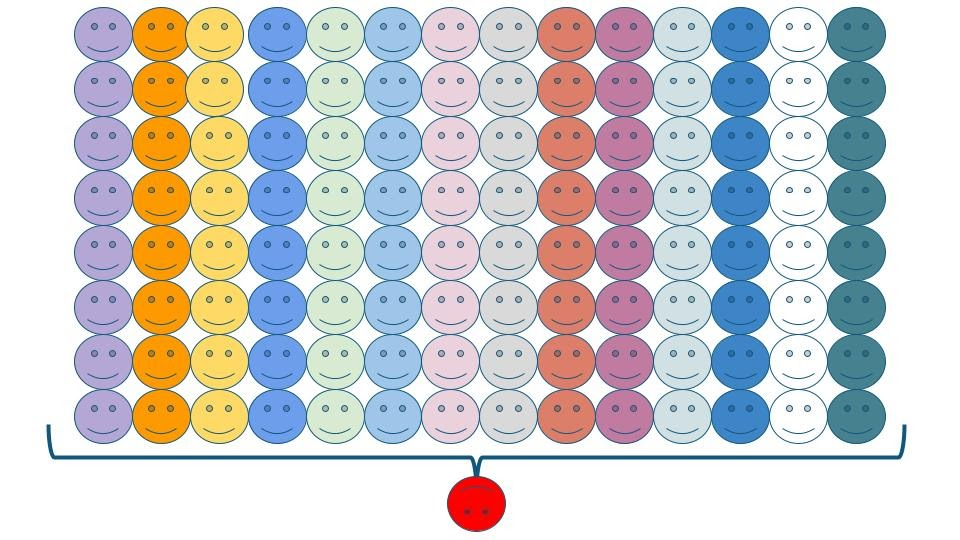

Smiling happy security partners and developers


Key takeaways from this post

Scale is about trust and empowerment, not centralization and control. Make it clear that software engineers are responsible for the security of what they produce, then focus on enabling them. Basics like repeatable, robust evidence collection are prerequisites to scale.

When you are just getting started, always err toward high risk. Spending more time than needed on security review can be costly, but provides the opportunity to figure out how to avoid it next time. It also avoids shipping even costlier vulnerabilities.

Listen to software engineers, make them part of every decision. Figure out how to give them what they want while creating the accountability the business needs. Focus on creating great tools and excellent processes to make them successful.

As you grow, avoid threat modeling every feature; instead, assess security impact and risk, then eliminate the risk for next time with secure defaults. Choosing what not to threat model, and having tools like Semgrep that let you create and express secure defaults, are key to achieving scale.

Managing timeline risk is as important as managing security risk. Give engineering teams the tools to predict how long security reviews will take before they commit dates.